OpenAI's GPT-3 Will Change How We Build, With or Without It

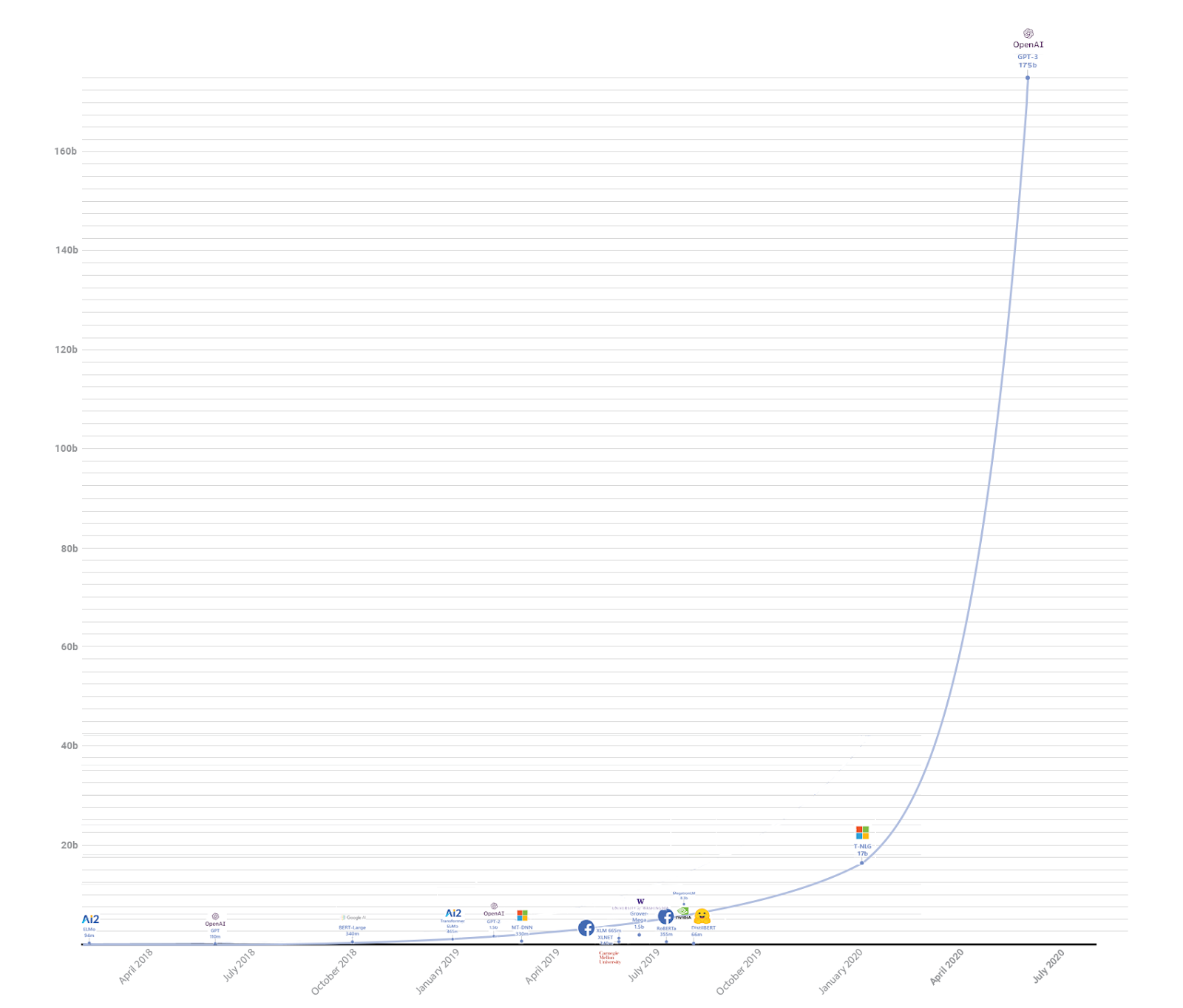

Image Credit: Leo Gao

For years, there have been warnings that AI is going to eliminate routine, manual jobs. While this hasn’t yet come to fruition, the mood about AI and job elimination seemed to change this past week. GPT-3, an AI model that performs next-word prediction, plastered Twitter feeds with examples of how AI could not only replace manual workers, but knowledge workers as well.

I’m a skeptic. I don’t think AI will completely displace workers anytime soon. However, I also think there are valuable lessons in how AI models like GPT-3 generate novel content. These learnings can help us in our work now. AI such as GPT-3 may soon augment some of the product creation process, but the biggest help it provides is in showing us how to be better thinkers, and thus, build better products.

What is GPT-3?

The scientific paper describing GPT-3 was released back in May, and the API was made available in a limited, private beta this past week. For those of you who don’t spend all your time on Twitter, GPT-3 made waves with examples of how it could create websites from natural language, write product requirements based on a problem statement, and create novel tweets.

GPT-3 is an AI model developed by OpenAI. It is the third generation of the Generative Pre-trained Transformer (GPT) models. GPT models are a type of machine learning model trained on a large, diverse corpus of text. Unlike typical supervised machine learning models, they are not given a separately labeled variable to predict during training; the text itself supplies the target, and the model learns to predict the best next word given a section of text. Because the performance of these models depends heavily on scale, the team at OpenAI grew the model by roughly two orders of magnitude between GPT-2 and GPT-3 (from 1.5 billion to 175 billion parameters) and greatly expanded the training set. The training corpus for GPT-3 is essentially the entirety of the open internet as of October 2019.
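To make the idea of training without a labeled target a little more concrete, here is a minimal sketch of how raw text can supply its own training examples. This is only an illustration, not OpenAI's pipeline: the function name `next_word_pairs` and the `context_size` parameter are made up for this post, and real GPT models work on sub-word tokens with far longer contexts.

```python
def next_word_pairs(text, context_size=3):
    """Turn raw text into (context, next_word) examples.

    Hypothetical helper for illustration only: no human labels are needed,
    because every word in the text is the "answer" for the words before it.
    """
    words = text.split()
    pairs = []
    for i in range(1, len(words)):
        # The context is up to `context_size` words immediately before word i.
        context = tuple(words[max(0, i - context_size):i])
        pairs.append((context, words[i]))
    return pairs


pairs = next_word_pairs("the cat sat on the mat")
for context, target in pairs:
    print(" ".join(context), "->", target)
```

Every sentence on the internet can be sliced up this way, which is part of why such a large corpus is usable without anyone labeling it by hand.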

How does it work?

One important characteristic of GPT-3 is that it requires priming to work. Priming is when you expose someone (or something) to a specific stimulus that then results in the identification of other things that were previously unnoticed. Here’s an example:

Imagine you are in the market for a new car. You want to be original but not too flashy, so you start looking for a Volvo. You poke around on Carvana, Volvo.com, and a few other car sites before deciding on a model you like. You still don’t feel quite ready to buy, so you put it on the back burner and hop in the car to get lunch. On your way to the fast-food joint down the street, you notice the exact car you were just looking at online! “What a coincidence!” you think to yourself. “I never see any Volvos out on the road, let alone the exact model I was just looking at.” Throughout the rest of your trip, you notice four more Volvos. This makes you wonder if you were original at all by assuming a Volvo was a less-than-popular car.

I am sure this has happened to you, if not with a car, then with something else. Nobody read your search history and bought a bunch of Volvos just to mess with you. You just weren’t looking for them before. This is priming. Your initial stimulus was the decision that a Volvo is a fairly uncommon car, so you looked at a few online. It’s only after this stimulus that you recognize there are more Volvos on the road than you thought. (In fact, I bet the next time you are out driving you will notice a Volvo. You’ve been primed by reading this example!)

Just like you need priming to recognize something you haven’t noticed before, GPT-3 needs priming to generate new content that hasn’t been seen before. The priming is done by providing a prompt as an input to the model. This could be the beginning of an essay, a question, or even a partial block of code. All GPT-3 will do is determine the best next word to complete your prompt, append it, and repeat that process until you tell it to stop.
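The prompt-then-repeat loop described above can be sketched with a toy model. To be clear, this is not how GPT-3 is implemented: GPT-3 is a large neural network, while the sketch below swaps in a simple word-pair counter so the loop itself is easy to see. The names `train_bigram` and `complete`, and the tiny corpus, are assumptions made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count which word follows which in the training text (toy stand-in for a model)."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def complete(counts, prompt, max_words=5):
    """Extend a non-empty prompt one word at a time.

    Each step picks the most frequent follower of the last word, appends it,
    and repeats until max_words is reached or no follower is known.
    """
    words = prompt.split()
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break  # the model has never seen this word, so it has nothing to add
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)


corpus = "the car is fast . the bike is fast . the road is long ."
model = train_bigram(corpus)
completion = complete(model, "the road", max_words=3)
print(completion)
```

Notice that the prompt decides everything that follows: a prompt the model has never seen produces nothing new, and a different prompt sends the completion in a different direction. That is the sense in which the primer matters.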

Primers to Building

While GPT-3 is certainly an amazing step-change in the functionality of AI, it isn’t going to replace the generation of new product ideas anytime soon. The biggest reason is that it needs an effective primer to build anything novel. You need to see the world in a unique way to create a primer that generates a meaningful new insight. GPT-3 may become a very useful tool for product managers: changing how they write requirements, discover missing features, and validate new market opportunities. But above all, I believe it best serves as an example of how creating better primers helps us build better products.

The hardest phase of building any new product is making the decisions about novel features. These are the parts that are at the core of solving the customer’s problem. Often we try to do this by brainstorming with our colleagues. You stand next to a blank whiteboard, your colleagues staring back at you, waiting for someone to have an idea. When they finally say something, it seems fairly predictable, lacking any true ingenuity and creativity. Though this may be a common experience during brainstorming with others or by yourself, the thing that takes your decision making from mediocre to amazing is a good primer.

There are some basic things we can learn about creating primers by studying the types of primers that result in quality GPT-3 outputs. Just like GPT-3 needs a primer to generate output, product managers need a primer to create novel and valuable solutions to their customers’ problems.

1. The Medium Of Your Primer Matters

For GPT-3 to generate a novel bit of code, it needs partially complete code as the primer. To generate an answer, a question must be the primer. The generated output will match the format of the primer.

Our minds work in the same way. Some of the world’s best writers spend an inordinate amount of time reading. Reading is the same medium as writing (written language) and thus provides an effective primer for generating new ideas through writing. Reading not only helps you generate and realize new ideas to write about, but it also helps you become a better writer.

In the same way, artists paint scenes from the world around them. Their environment and the visual imagery they immerse themselves in inspire future works of art.

So what type of medium should product managers consume if they want to build better products? It depends on the task. If you are trying to generate new ideas for how to display data on a webpage, scour the internet for dashboards and data formatting tools. Take note of how they display information and use those observations as inputs to your design.

If you are trying to create a completely new product idea, you should be looking at niche and novel products that uniquely solve other problems. When doing this, look at the ways the product solves the user’s core problem. These nuggets of inspiration will get your mind primed for generating novel techniques to solve your user’s problems, even if it is a completely different domain.

Trying to convert from one medium to another adds cognitive load. Lower the activation energy of these new ideas by using a primer that matches the expected medium of your output.

2. Your Primer Should be Something Directional

But having a primer that matches the medium of your output isn’t enough. The primer must also direct your thinking around the specific problem you are trying to solve. Priming GPT-3 with something that is too open-ended and ambiguous results in aimless rambling unrelated to the user’s intended output. And, you guessed it, our brains work similarly. This is why writer’s block exists. You are staring at a blank page, unsure of what to write because there are too many options for what could come next.

When trying to design a new website, you don’t want to start with a blank browser shell. This provides too little direction. Instead, collect examples of elements you think look nice, choose a color scheme, and decide on a set of basic needs. Creating this palette of ideas will help you create something more valuable because it provides enough direction to get you moving and enough ambiguity to allow your creativity to shine.

A highly effective way to provide directionality in your primer is to leave an idea incomplete, even if you think you know the best way to solve it. Leaving a problem half solved allows your mind to wander, thinking about all the possible ways to complete the half-baked solution. Because our minds don’t like the cognitive dissonance of an incomplete problem, they are forced to think of ways to solve it.

3. Consume the Output of Your Work as an Input to the Next

If you are creating a feature list of what your product can do, use each new feature as an input to the next. This is something that differentiates the human ability to generate unique and cohesive ideas from models such as GPT-3. GPT-3 cannot understand what the previous inputs mean, only that they are a collection of inputs to generate an output. When you are building a list of features, you can understand how one might impact another, or how a basic feature could inform more complex workflows downstream.

The other thing humans can do that GPT-3 cannot is change the inputs. You can see how a design choice made previously acts as a poor primer to drive further development in other parts of the application. Changing the upstream design has large downstream consequences, both good and bad. Don’t be afraid to tinker with decisions that were made previously to see how they change the later outputs.

4. The Quality of Your Primer Matters

Writers don’t get better at writing by reading crappy books. Painters don’t get better at painting by looking at bland landscapes. Product managers don’t get better at building products by studying boring solutions.

A primer that is both the appropriate medium and provides clear direction isn’t enough to ensure great output. The quality of the primer must also match the quality of the desired output. For product managers, this means studying highly creative and successful products (both niche and mainstream).

Increasing the quality of your inputs also requires a wider breadth of industries as inspiration. If healthcare companies never lifted their heads to look at how other industries were managing data, electronic medical records would not exist. You should take time to lift your head, look out at other industries that have a similar core problem to yours, and take inspiration from the products that are solving it best.

Low-quality primers lead to low-quality outputs. But the inverse is also true. Always ask yourself if you are using the highest-quality primers for your own thinking. If not, search for where the highest-quality inspiration can be found. Only use the best possible primers for your product; it is the easiest way to increase the quality of your output.

TL;DR: With GPT-3 or Without It

Models such as GPT-3 may have a profound impact on how we design, build, and create new products. The future is bright and the possibilities endless for how this type of model could be applied. Whether or not GPT-3 truly has the capability to replace some knowledge workers is yet to be proven. But while the applications begin to appear, we can already learn from how these models generate so much novel content. Identifying what makes a great primer and applying that to the way we think and build could have a profound impact on our ability to create.

Here’s how we can apply what makes GPT-3 great to our current product management style:

1) The medium of your primer should match your expected output medium

2) Your primer should be directional

3) You should consume the output of your work as a primer to any future work

4) High-quality primers lead to high-quality outputs

As we learn more about these generative models and what makes them work most effectively, we should adapt ourselves accordingly. The things that make the best primers for GPT-3 also make great primers for us.

Thank you to Josh Mitchell and Ross Gordon for reviewing this post.